Upload to Amazon S3 from Dropbox using Hazel

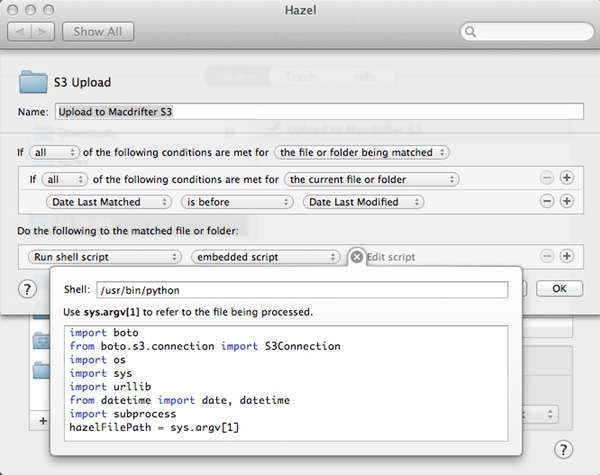

I've been fiddling with my Hazel Dropbox-to-FTP rule lately. In the middle of that, I received a polite prompt to make it work with Amazon S3 instead. I'm a sucker for learning something new, so I made this Python-based Hazel rule.

I installed the boto module for working with AWS. It's a mature library with methods for doing all sorts of things with Amazon's services. I really only needed a few of them, though.

    :::bash
    sudo easy_install boto

Then this script goes into a new Hazel rule.

    :::python
    import boto
    from boto.s3.connection import S3Connection
    import os
    import sys
    import urllib
    from datetime import date, datetime
    import subprocess

    # This is how Hazel passes in the file path
    hazelFilePath = sys.argv[1]

    # Obviously, you'll need your own keys
    aws_key = 'AWESOME_KEY'
    aws_secret = 'SUPER_SECRET_KEY'

    # This is where I store my log file for these links. It's a Dropbox file in my NVAlt notes folder
    logFilePath = "/Users/weatherh/Dropbox/Notes/Linkin_Logs.txt"
    nowTime = str(datetime.now())

    # Method to add to clipboard
    def setClipboardData(data):
        p = subprocess.Popen(['pbcopy'], stdin=subprocess.PIPE)
        p.stdin.write(data)
        p.stdin.close()
        retcode = p.wait()

    # This is the method that does all of the uploading and writing to the log file.
    # The method is generic enough to work with any S3 bucket that is passed.
    def uploadToS3(localFilePath, S3Bucket):
        fileName = os.path.basename(localFilePath)

        # Determine the current month and year to create the upload path
        today = date.today()
        datePath = today.strftime("%Y/%m/")

        # %-encode the path and create the URL for the image
        imageLink = 'http://'+urllib.quote(S3Bucket+'/'+datePath+fileName)

        # Connect to S3
        s3 = S3Connection(aws_key, aws_secret)
        bucket = s3.get_bucket(S3Bucket)

        # datePath already ends in a slash, so the key is YEAR/MO/filename
        key = bucket.new_key(datePath+fileName)
        key.set_contents_from_filename(localFilePath)
        key.set_acl('public-read')

        # Append the URL to the log file and copy it to the clipboard
        logfile = open(logFilePath, "a")
        try:
            logfile.write(nowTime+' '+imageLink+'\n')
            setClipboardData(imageLink)
        finally:
            logfile.close()

    # How's this for terrible design. The actual body of the script is one line. I'm my own worst enemy.
    uploadToS3(hazelFilePath, 'media.macdrifter.com')

This is very similar to the FTP rule. I add a file to a folder, and Hazel uploads it to the designated S3 bucket, then adds a link to my Link Log file in NVAlt and to my clipboard stack. Since the folder I use is in Dropbox, I can add an image or video right to Dropbox and get back an Amazon S3 link.

The Link log looks something like this:
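Going by the script, each entry is a timestamp, a space, and the URL. Here's a minimal sketch of how one line gets built. The `format_log_line` helper and the file name are made up for illustration, and the timestamp is fixed so the result is reproducible.

```python
from datetime import datetime

def format_log_line(image_link, now=None):
    """Return one log entry: timestamp, a space, the URL, and a newline."""
    if now is None:
        now = datetime.now()
    return str(now) + ' ' + image_link + '\n'

# Hypothetical file name and a fixed timestamp, purely for illustration
line = format_log_line('http://media.macdrifter.com/2012/05/example.png',
                       datetime(2012, 5, 14, 9, 30, 0))
print(line)
```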

Notes

My S3 bucket name is "media.macdrifter.com" so that I could use it as a subdomain for this site.

I structured the bucket folders like the ones in my WordPress content directory: YEAR/MO, for example "2012/05". I added a couple of extra lines to the script to get this structure. S3 doesn't have real folders, so there's nothing to create ahead of time. The path is simply part of the key name handed to the new_key method.
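To make the YEAR/MO idea concrete, here's a small sketch of building a key name from a local file path. The `build_key_name` helper and the example path are hypothetical, not part of the Hazel rule. The slashes are just characters in the key name.

```python
import os
from datetime import date

def build_key_name(local_path, day=None):
    """Return a key name like '2012/05/example.png' for the given file."""
    if day is None:
        day = date.today()
    # strftime gives 'YEAR/MO/'; the file name is appended as-is
    return day.strftime('%Y/%m/') + os.path.basename(local_path)

# Hypothetical local path and a fixed date, purely for illustration
key_name = build_key_name('/Users/me/Dropbox/Upload/example.png', date(2012, 5, 14))
print(key_name)
```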

If a file already exists with the same name as the one being uploaded, this script will overwrite it without warning. You've been warned.

It's actually convenient that files will be overwritten. It means I can browse to the Dropbox folder that Hazel is watching and edit the image directly. Any saved changes are immediately pushed up to Amazon S3. It's like directly editing images on S3 (with Acorn, of course).
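If the silent overwrite is ever unwanted, one option is to look for the key before uploading. This is only a sketch: as far as I understand boto's documentation, a bucket's `get_key` returns `None` when nothing exists under that name. The `is_safe_to_upload` helper and the `FakeBucket` stand-in are made up so the example runs without an AWS account.

```python
def is_safe_to_upload(bucket, key_name):
    """Return True when no object with this key name exists yet."""
    return bucket.get_key(key_name) is None

class FakeBucket(object):
    """Hypothetical stand-in for a boto bucket, for illustration only."""
    def __init__(self, existing_names):
        self.existing_names = set(existing_names)

    def get_key(self, key_name):
        # Mimic the assumed boto behaviour: None when the key is missing
        return key_name if key_name in self.existing_names else None

bucket = FakeBucket(['2012/05/example.png'])
print(is_safe_to_upload(bucket, '2012/05/example.png'))
print(is_safe_to_upload(bucket, '2012/05/new-image.png'))
```

In the real script, the check would go right before the call to `set_contents_from_filename`, using the bucket returned by `get_bucket`.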

I don't really use Amazon S3 for image hosting. I have no problem hosting the images myself. This was just something that struck me as interesting and someone else might find it useful.

I was quite impressed with the boto module's ease of use. Amazon's S3 was also fairly easy to get going with. Configuring the CNAME on my DNS was the hardest part of the entire process. If I didn't care about having a nice-looking URL for the hosted images, I could have skipped the CNAME and gotten on with wasting my time in some other way. I like the nice-looking URLs, though.
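For comparison, here's a rough sketch of the two URL shapes: the short one that relies on the CNAME, and the longer one that goes through Amazon's own host name. The `public_url` helper and the file name are hypothetical. The import fallback is only there so the snippet runs on Python 2 (like the script) or Python 3.

```python
try:
    from urllib import quote          # Python 2, as in the script above
except ImportError:
    from urllib.parse import quote    # Python 3

def public_url(bucket_name, key_name, use_cname=True):
    """Build a public link, with or without the CNAME'd bucket name as host."""
    if use_cname:
        host = bucket_name                        # needs the DNS CNAME record
    else:
        host = bucket_name + '.s3.amazonaws.com'  # works without any DNS setup
    # quote() leaves slashes alone and %-encodes spaces and the like
    return 'http://' + host + '/' + quote(key_name)

# Hypothetical key name, purely for illustration
print(public_url('media.macdrifter.com', '2012/05/my image.png'))
print(public_url('media.macdrifter.com', '2012/05/my image.png', use_cname=False))
```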